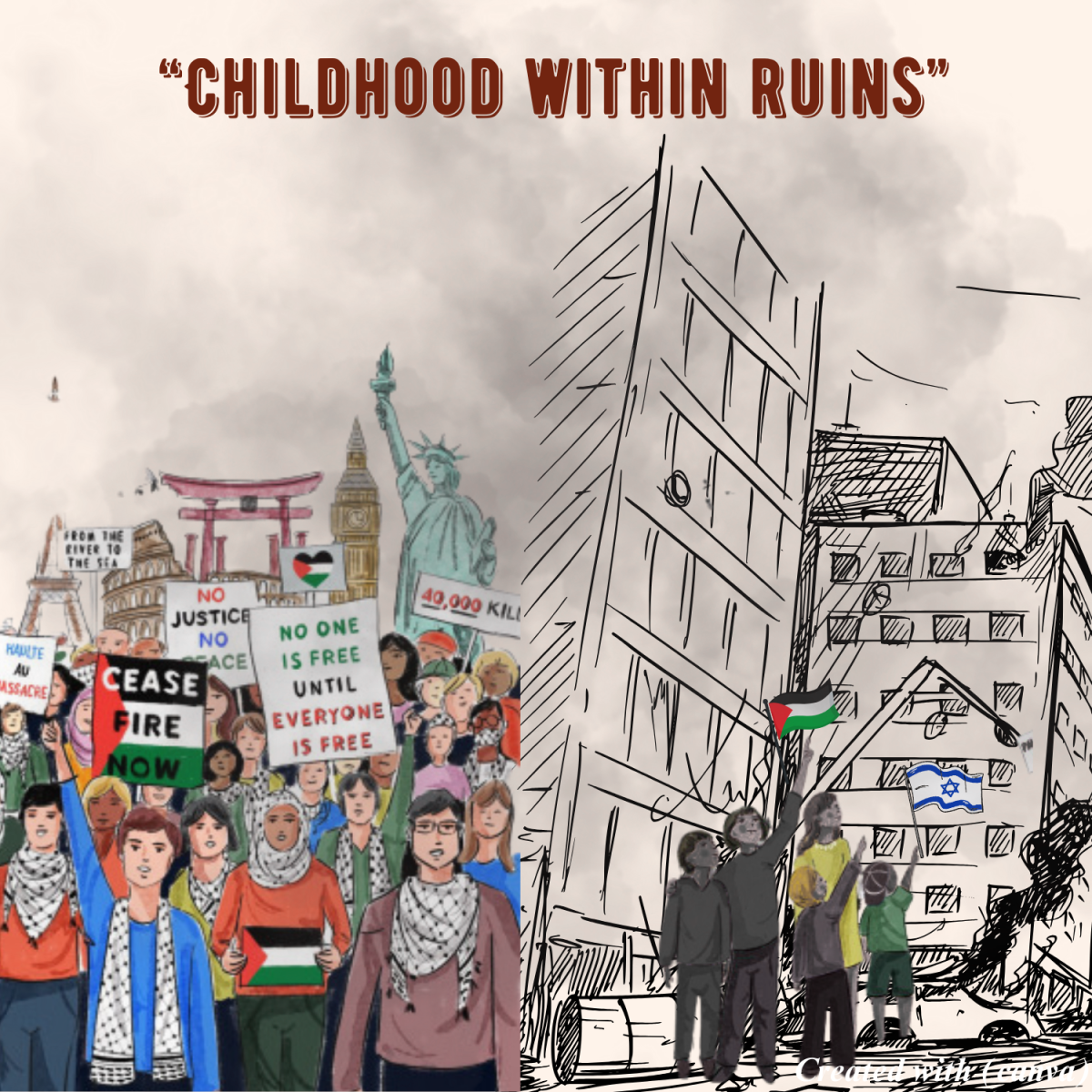

In a digital age where artificial intelligence is reshaping political discourse, a recent AI-generated video titled “Future of Gaza” has ignited widespread debate. Created by Israeli-American filmmakers Solo Avital and Ariel Vromen of Eyemix Immersive Visuals, the video was later released by President Trump on his Truth Social platform on February 26, 2025. Its idealized vision of Gaza’s future challenges the boundaries of ethical storytelling and visual communication in a conflict-ridden region.

The release of this video raises significant questions about the ethical use of AI in crafting political narratives. Critics argue that portraying a utopian Gaza risks oversimplifying the complex realities faced by its inhabitants, potentially misleading audiences about ongoing humanitarian challenges. As AI technology increasingly influences media creation, the responsibility for ensuring that these narratives are accurate and ethically sound becomes crucial. This evolving landscape underscores the need for a careful balance between innovation and ethical standards as digital storytelling continues to shape public perceptions of sensitive geopolitical issues.

Video History

The “Future of Gaza” video, crafted by Israeli-American filmmakers Solo Avital and Ariel Vromen, was initially intended as a satirical exploration of the region’s potential future. Avital and Vromen, known for their innovative use of AI in visual storytelling, sought to challenge viewers’ perceptions by presenting a radically transformed Gaza. However, once the video was released by President Trump on Truth Social, it became a lightning rod for controversy, drawing attention to the potential for AI-generated media to misrepresent reality.

Critics have argued that the video’s depiction of a prosperous, modernized Gaza starkly contrasts with the grim realities faced by its residents. This has raised concerns about the potential for such media to mislead audiences and oversimplify complex geopolitical realities.

Diana Granados, a freshman and Rhyme & Arts oil painter, shares her perspective on the video’s impact.

“The video starts off with children being led away by soldiers … the sudden transition to Trump … the use of gold when portraying him, because the gold is kind of seen as a status symbol,” Granados said.

Granados points out that this imagery diminishes the real struggles of Gaza’s residents and projects an unrealistic vision that could mislead viewers about the actual conditions on the ground. The video’s creators intended to spark conversation, but many feel the message was lost in translation.

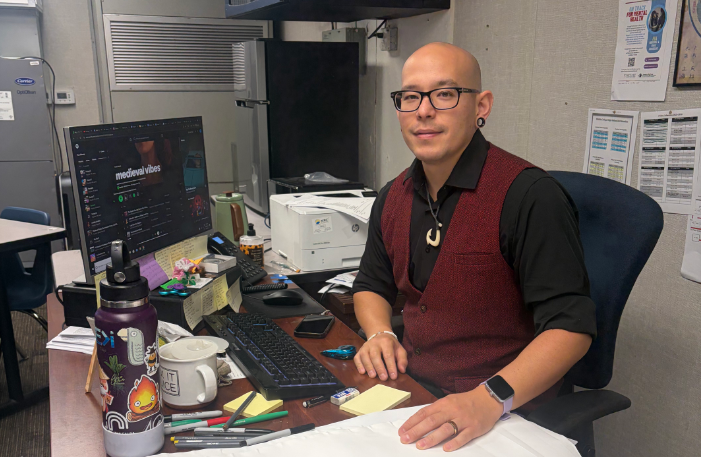

Peter Weitzner, a professor of visual communications and media studies at Santa Ana College and Chapman University, shares his insights on this depiction.

“Such projects need a clear context to ensure that the message isn’t lost or misinterpreted,” Weitzner said.

Visual Communication Analysis

Visual communication is a powerful tool in shaping public perception, particularly when AI-generated content is involved. The use of vivid imagery and symbolic elements in the “Future of Gaza” video has been criticized for potentially distorting reality. Experts like Weitzner emphasize the importance of careful, well-researched creation to prevent misinterpretation and ensure that complex issues are represented fairly.

“I think that when portraying something like that, you definitely need to do your own research beforehand … be aware of what elements of art you’re using when actually creating it, as misleading visuals can have a profound impact in viewers’ understanding, especially in a region as complex as Gaza,” Weitzner said.

The ethical responsibility of media creators extends beyond artistic expression; it requires a commitment to presenting balanced and informed narratives that do not oversimplify or mislead. This is particularly crucial when addressing politically sensitive topics.

“The images can make people forget the real-life challenges that the residents of Gaza face every day, which is extremely insensitive,” Granados said.

Ethical Concerns

The ethical implications of using AI to craft political narratives are significant. Critics argue that AI-generated content can easily oversimplify complex geopolitical issues, potentially leading to misinformation and a skewed understanding of reality. This concern is particularly pertinent in the context of the Gaza conflict, where oversimplification can lead to a lack of understanding and empathy for those affected.

“Using AI just kind of like, it dismisses the issue. It doesn’t take it seriously, which is concerning,” Granados said.

Granados’ viewpoint highlights the broader worries about AI’s capacity to distort public understanding of sensitive geopolitical topics. The ethical responsibility lies in the hands of creators to ensure balanced and informed views are presented, rather than sensationalized visuals.

“Creators have a duty to present balanced and informed views, not just sensationalized visuals,” Weitzner said.

These concerns underscore the need for careful handling of such technologies, particularly when addressing politically sensitive topics. The use of AI in media requires a more nuanced approach that respects the complexity of the issues at hand.

Challenges & Implications

The use of AI in media presents both opportunities and challenges, particularly in how narratives are crafted and received. The potential for AI-generated content to influence public discourse is significant, raising questions about the role of technology in shaping societal narratives. As AI technology continues to advance, its ability to create realistic and persuasive media content grows, making it imperative for consumers to engage critically with the media they consume.

“The public needs to be informed, use multiple sources, and try to stay rational … that’s the biggest thing we lack today, right, is the lack of civil discourse. Engaging critically with media is crucial in an era where digital narratives can easily influence perceptions,” Weitzner said.

This underscores the importance of media literacy, encouraging audiences to critically assess the media they consume and seek out diverse perspectives. The responsibility for navigating these challenges lies not only with creators but also with consumers, who must be vigilant and discerning.

“Consumers of the media have to be vigilant and question the narratives presented to them,” Granados said.

Impact on Public Perception

AI-generated content has a profound impact on how audiences understand and engage with political narratives. As digital storytelling evolves, ensuring ethical standards in media creation becomes increasingly important. The responsibility lies not only with creators but also with consumers to engage thoughtfully with the media. Understanding the power of visuals and their potential to shape perception is crucial for fostering informed and empathetic public discourse.

“Viewers need to be aware of the sources and of the content they consume and question the narratives being presented,” Granados said.

This insight serves as a reminder of the power and responsibility of media in shaping public discourse, urging both creators and consumers to approach digital content with a critical eye. As AI technology continues to influence the media landscape, maintaining ethical standards in storytelling is essential for ensuring that narratives are accurate and reflective of reality.

“Both sides need to engage in a dialogue to ensure the media serves to inform and educate accurately,” Weitzner said.

The “Future of Gaza” video serves as a poignant example of the potential and pitfalls of AI-generated media. As the conversation around AI and its role in political narratives continues, the need for ethical media practices remains crucial.